Post-mortems

Please keep in mind that these examples are cherry-picked from my worst predictions. (Worst in the sense that someone with access to the same information at the time could have pointed out my mistake.)

Long COVID post hospitalisation

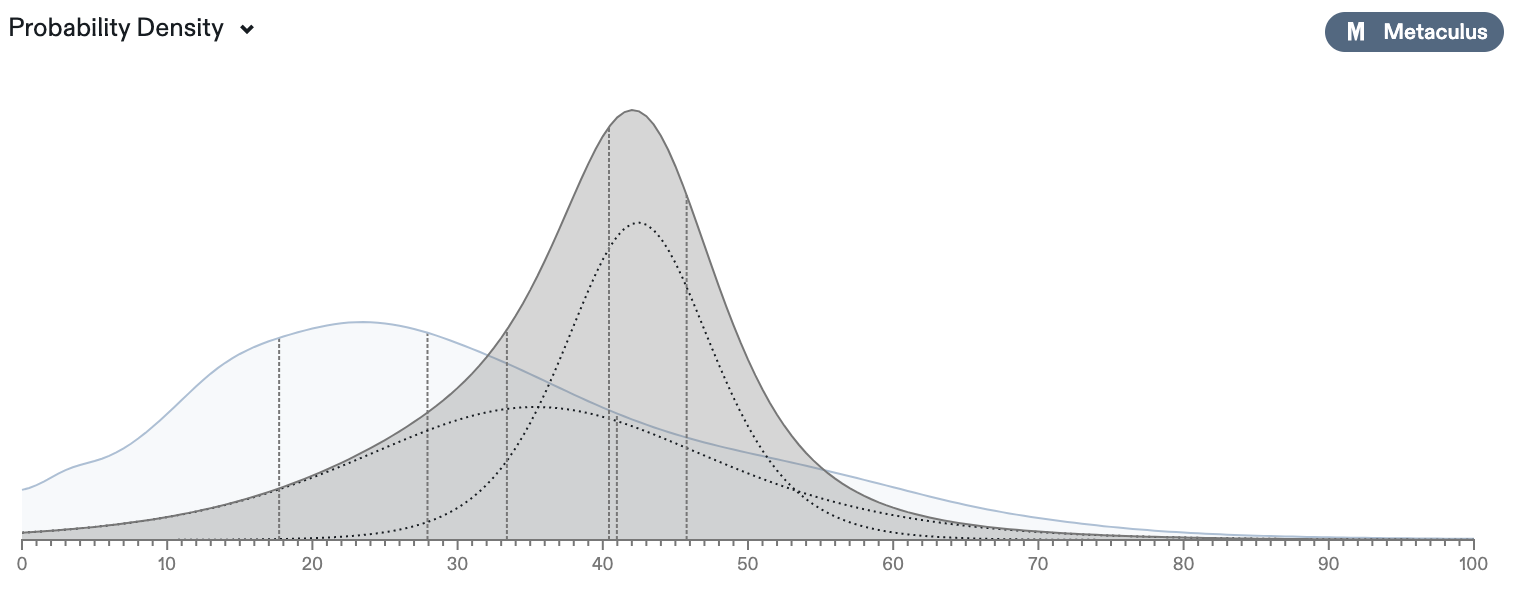

This was one of my first predictions on Metaculus; my reasoning can be found here. At the time of writing, this question hasn’t resolved yet, so what went wrong? I came up with a simple model which deviated significantly from the community forecast, and then shifted the pdf a bit to the left to account for the possibility of the community knowing something I didn’t. Although I deviated from the community in what looks like the right direction, I completely failed to think about the tails of my distribution, which could potentially prove fatal. This seems to be a case of being too invested in one particular model without accounting for the possibility of model failure.
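One common way to account for model failure is to mix the model's distribution with a much wider fallback distribution. The sketch below is not the model I actually used; all numbers (`mu`, `sigma`, `p_model_fails`, `threshold`) are hypothetical and chosen only to show how strongly a small weight on "my model is wrong" changes the tails.

```python
from statistics import NormalDist


def mixture_cdf(x, components):
    """CDF of a mixture distribution.

    components is a list of (weight, NormalDist) pairs whose weights sum to 1.
    """
    return sum(weight * dist.cdf(x) for weight, dist in components)


# Hypothetical numbers, for illustration only.
model = NormalDist(mu=10.0, sigma=1.0)     # the single, confident model
fallback = NormalDist(mu=10.0, sigma=5.0)  # wide "my model is wrong" component
p_model_fails = 0.2                        # assumed chance of model failure

model_only = [(1.0, model)]
hedged = [(1 - p_model_fails, model), (p_model_fails, fallback)]

# Probability mass above an outcome that the confident model
# treats as practically impossible (five sigmas out).
threshold = 15.0
tail_model_only = 1 - mixture_cdf(threshold, model_only)
tail_hedged = 1 - mixture_cdf(threshold, hedged)

print(f"model only: {tail_model_only:.2e}")
print(f"hedged:     {tail_hedged:.2e}")
```

Under these assumptions the hedged forecast puts roughly 3% above the threshold, while the single model puts well under one in a million there. The bulk of the distribution barely moves, so the hedge costs little if the model turns out to be right.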

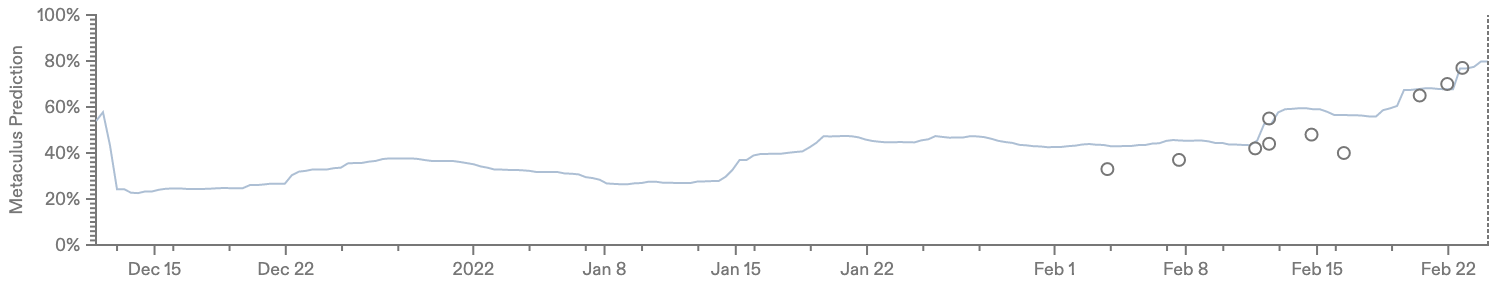

Russian invasion of Ukraine before 2023

There is a lot to be said about this question; see the arguments for an invasion made as early as December, base rates, the track records of media and US intelligence in calling it relatively early, and the way “good” forecasts (on the invasion) correlated strongly with “bad” forecasts (on the invasion’s success), and vice versa.

In short, there were signals pointing in different directions, and even in hindsight it doesn’t seem obvious what a good forecast should have been. However, I can identify where I went wrong, namely in relying too much on the following heuristic:

At the end of the day, once the sabre-rattling is done, leaders/nations typically end up not doing anything that’s grossly misaligned with their economic goals.

This heuristic worked in the past for questions about OPEC production cuts, countries’ reactions to abuse by their biggest trading partner, etc. Two possible reasons for it to fail come to mind:

- This heuristic is simply wrong or doesn’t apply to leaders whose plan is to go down in history instead of being reelected (fairly).

- Like many Western (often conservative) forecasters who were more confident about an impending invasion, Putin believed that the invasion wouldn’t be met with much resistance, either from Ukrainians or in the form of economic sanctions; so in his eyes it might not have been misaligned with his economic goals at all.

The takeaway thus seems to be to

- account for other (more personal) motives, especially in a non-democratic environment,

- account for leaders surrounding themselves with people who tell them what they want to hear (and, in particular, lacking access to good intelligence services),

- take the Madman theory a bit more seriously.

When will the UK reach herd immunity >53.3m for Covid-19?

A classic: I predicted on the title alone. The resolution criteria actually state that the question resolves as the date on which a credible media report is published stating that the >53.3m immunity threshold has been reached, rather than the date on which the threshold is actually reached.

The takeaway is obviously to read the resolution criteria carefully and to take into account how they might fail to capture the intended question. I actually started doing this, and it didn’t take long before I stumbled upon a similar problem: if a question resolves ambiguously under some conditions, then it effectively asks for a probability conditional on those conditions not occurring, which might skew probabilities a lot.
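To make the size of that skew concrete, here is a minimal sketch of the arithmetic. The function name and all input probabilities are hypothetical; the only assumption is that a forecast is scored only when the question does not resolve ambiguously.

```python
def prob_yes_given_resolves(p_yes, p_amb_given_yes, p_amb_given_no):
    """Probability of "yes" conditional on a non-ambiguous resolution.

    p_yes:            unconditional probability that the event happens
    p_amb_given_yes:  chance of ambiguous resolution if it happens
    p_amb_given_no:   chance of ambiguous resolution if it does not
    """
    yes_and_resolves = p_yes * (1 - p_amb_given_yes)
    no_and_resolves = (1 - p_yes) * (1 - p_amb_given_no)
    total = yes_and_resolves + no_and_resolves
    if total == 0:
        raise ValueError("the question always resolves ambiguously")
    return yes_and_resolves / total


# Hypothetical example: the event itself is a coin flip, but in 80% of the
# worlds where it happens the question resolves ambiguously.
effective = prob_yes_given_resolves(
    p_yes=0.5, p_amb_given_yes=0.8, p_amb_given_no=0.0
)
print(f"unconditional: 0.50, conditional on resolving: {effective:.2f}")
```

With these made-up inputs, an event with a 50% unconditional chance should be forecast at about 17%, because most of the "yes" worlds never get scored. If the ambiguity is equally likely in both cases, the two probabilities coincide and nothing needs adjusting.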

A much more extreme example: I was told about a real-money (crypto) market on whether Biden would be president on a certain date. This was clearly supposed to be a proxy for whether Biden would win the election against Trump, but the date was one year in the past! It was obviously a typo, but that didn’t keep traders who didn’t read carefully from betting (and losing) a lot of money on it.